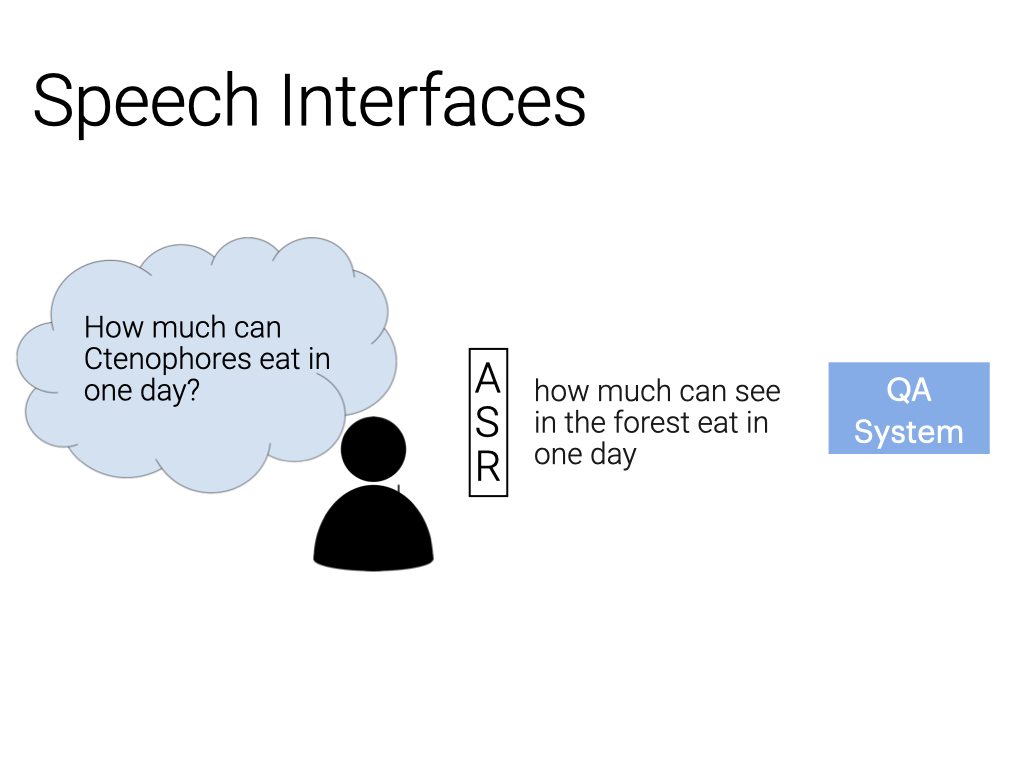

Speech Interfaces

Automatic speech recognition (ASR) noise simulates the errors introduced when users interact with QA systems through a speech interface. This type of noise has become more prominent with the advent of voice assistants, and practitioners need to handle such errors to accommodate users who rely on voice input.

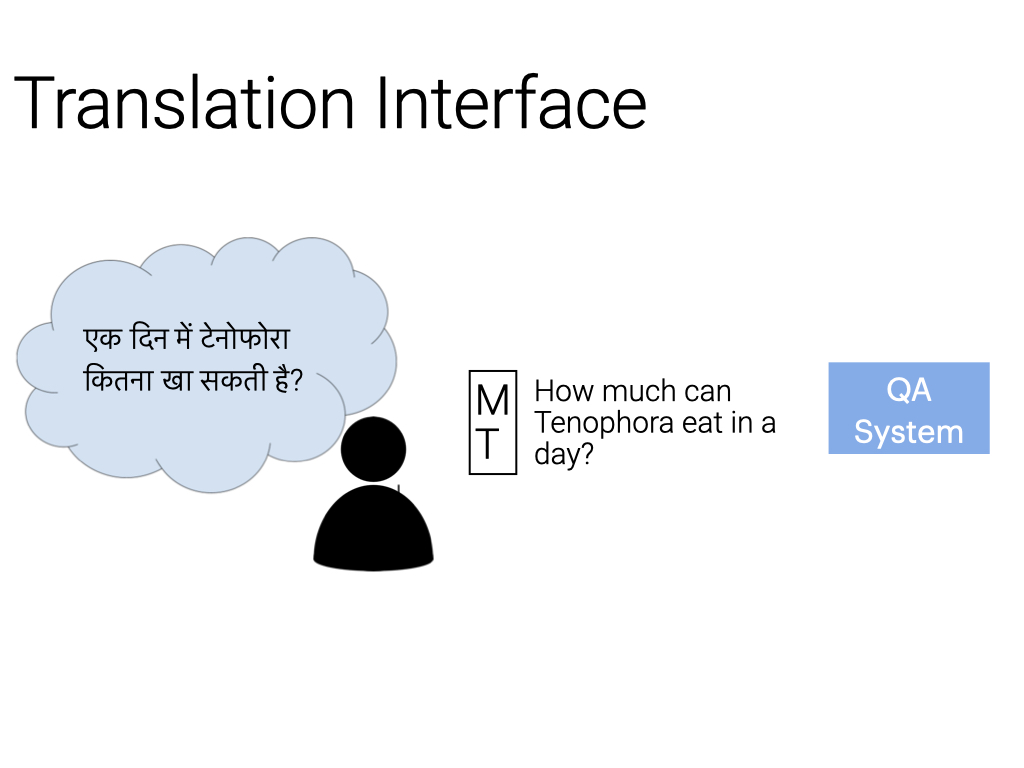

Translation Interfaces

Translation noise replicates the effect of users passing their questions through a translation system. For many languages, reference information for a particular query might not be readily available online, so users may employ a machine translation engine to interact with a QA system built for another language.

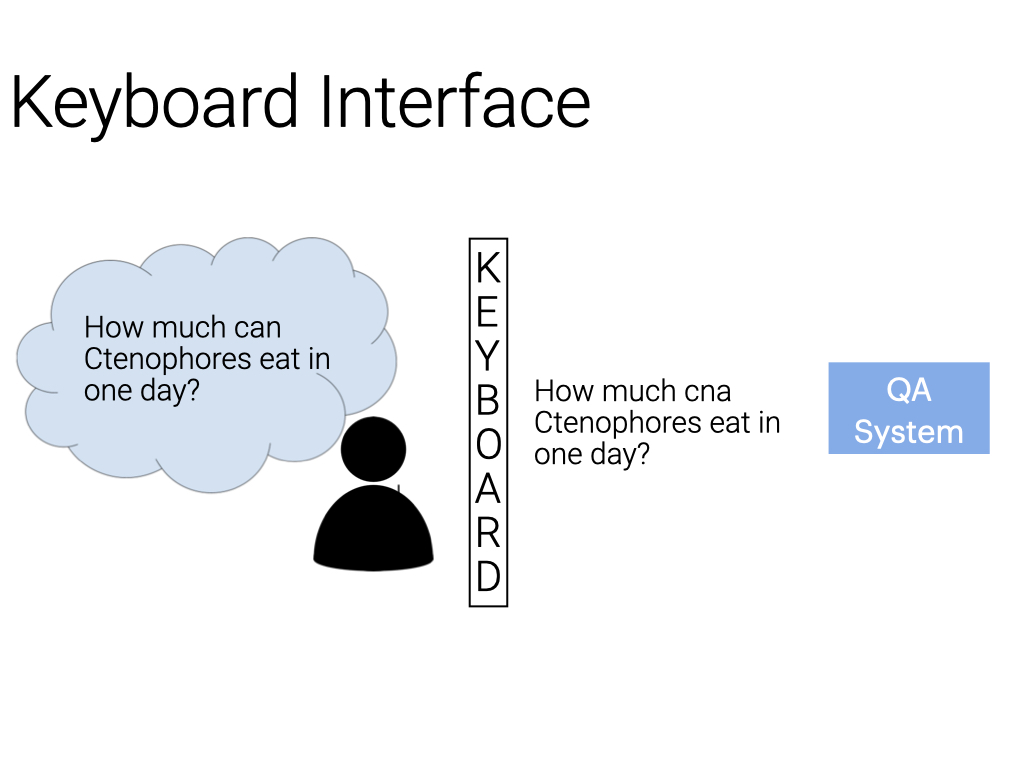

Keyboard Interfaces

Keyboard noise refers to errors that originate in the process of typing. Specifically, we focus on misspellings caused by keyboard layouts rather than by user misconceptions. These typos can present a challenge when queries are entered into a search engine, and we find that a simple spellchecker might not be enough to combat them.
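To make the idea of layout-driven typos concrete, here is a minimal sketch of one way such noise could be simulated. It is an illustration under stated assumptions, not the procedure used to build the data described here: it assumes a US QWERTY layout, treats only same-row left and right keys as neighbors, and replaces each letter independently with a fixed probability. The names `QWERTY_ROWS`, `build_neighbors`, and `add_keyboard_noise`, along with the `prob` and `seed` parameters, are hypothetical.

```python
import random

# Letter rows of a US QWERTY layout. Assumption for this sketch only;
# other layouts would need their own rows.
QWERTY_ROWS = ["qwertyuiop", "asdfghjkl", "zxcvbnm"]


def build_neighbors(rows):
    """Map each key to the keys directly left and right of it in its row."""
    neighbors = {}
    for row in rows:
        for i, key in enumerate(row):
            adjacent = []
            if i > 0:
                adjacent.append(row[i - 1])
            if i < len(row) - 1:
                adjacent.append(row[i + 1])
            neighbors[key] = adjacent
    return neighbors


NEIGHBORS = build_neighbors(QWERTY_ROWS)


def add_keyboard_noise(question, prob=0.1, seed=None):
    """Replace each letter with an adjacent key with probability `prob`.

    Non-letter characters (spaces, digits, punctuation) are left unchanged,
    and the case of each replaced letter is preserved.
    """
    rng = random.Random(seed)  # local generator so results are reproducible
    noisy = []
    for ch in question:
        lower = ch.lower()
        if lower in NEIGHBORS and rng.random() < prob:
            replacement = rng.choice(NEIGHBORS[lower])
            noisy.append(replacement.upper() if ch.isupper() else replacement)
        else:
            noisy.append(ch)
    return "".join(noisy)


if __name__ == "__main__":
    print(add_keyboard_noise("Who wrote Hamlet?", prob=0.2, seed=0))
```

Because every substitution swaps a letter for a physically adjacent key, the output often contains non-words that are still close to the original, while some substitutions can yield other valid words that a dictionary-based spellchecker would not flag. A fuller simulation might also model insertions, deletions, transpositions, and vertically adjacent keys.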